When moderators become the thought police…


February 7, 2022, 10:00 AM

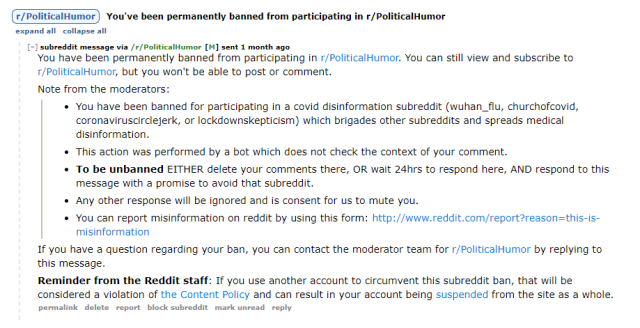

A few weeks ago, imagine my surprise one morning to find this in my Reddit inbox:

Apparently, I had been permanently banned from /r/PoliticalHumor, a subreddit I had never participated in, because I participated in another, unrelated subreddit (/r/LockdownSkepticism in my case) that its moderators disliked for questioning the mainstream narrative about COVID-19 mitigation measures. I laughed it off, precisely because I had never participated there. I took a look at it out of curiosity and discovered that it was full of low-quality, low-effort political memes. I quickly realized that I wasn’t missing anything by being banned. So, no big loss there.

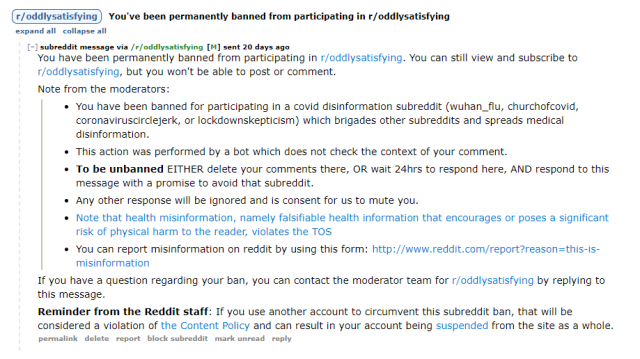

Then a few days later, I got this:

I also got identical ban messages from /r/atheism and /r/pics a few days after that. Note the additional line about “health misinformation” in this version compared to the one from /r/PoliticalHumor. Those three hurt a little, because those were subreddits I actually participated in, and I never discussed anything COVID-related there because it wasn’t relevant (i.e., I was behaving the way one is supposed to behave on Reddit). But after getting ban messages from all three of them solely because of my participation in another, unrelated subreddit, I unsubscribed from all three. If that’s how they’re going to be, then the hell with all of them.

In any event, a few things stuck out to me in the moderator notes:

> This action was performed by a bot which does not check the context of your comment.

In other words, all this bot did was look at who participated in the subreddits deemed “bad” and ban them on sight from the subreddit in question. The message amounts to this: because you participate in a subreddit that we disagree with, you are not welcome in this other, completely unrelated subreddit. That is banning on suspicion of “wrongthink”.

> To be unbanned EITHER delete your comments there, OR wait 24hrs to respond here, AND respond to this message with a promise to avoid that subreddit.
>
> Any other response will be ignored and is consent for us to mute you.

This is so childish and cliquish. It comes off as, “We don’t like this group of people, and as long as you associate with them, we don’t want anything to do with you, and we think you’ll actually comply because we’re the ‘cool kids’ (i.e., moderators of a larger subreddit).” This is elementary-school-level stuff. It actually reminds me of what my fifth grade teacher used to do to me: she would ostracize me from the rest of the class and pledge to keep me separated until I either wrote some sort of confession the way she wanted it (regardless of whether it corresponded with reality) or promised to behave a certain way.

As for getting unbanned: when I discussed these bans on another lockdown-critical subreddit, a commenter said that when they played along and promised not to participate, a moderator removed their ban, but the bot came right behind the human moderator and reinstated the ban a few hours later. In other words, the entire thing is an exercise in futility, because the promise of an unban for complying with certain demands is empty. I figure that either (A) the bot is so poorly programmed that it cannot be controlled, or (B) the people running these subreddits have no idea how to control the bot that they have let loose on other people’s subreddits. Whichever scenario it actually is, it’s not a good look.

> Note that health misinformation, namely falsifiable health information that encourages or poses a significant risk of physical harm to the reader, violates the TOS

This is the part where I feel like they are admitting that they know what they are doing is wrong, or at least questionable, and are trying to justify it to themselves. As I see it, this is where they admit that they are acting outside the scope of their authority as subreddit moderators. I’ve always considered enforcement of the Reddit user agreement to be above my pay grade as a subreddit moderator (Reddit moderators are not compensated for their service). A Reddit moderator’s responsibility is to moderate their own subreddits and ensure that those subreddits run smoothly in accordance with a few governing documents. Enforcement of the user agreement is outside of that scope as far as I’m concerned. Reddit has actual paid staff whose job it is to interpret the user agreement and issue sanctions based on it. It’s not up to unpaid volunteers to handle these sorts of matters.

From everything I can tell, even though these subreddits claim the moral high ground by citing the Reddit user agreement, I am fairly confident that these bans, as implemented, actually violate it. Section 8 of the user agreement governs moderators and defines their responsibilities. The first of those responsibilities incorporates another document into the user agreement:

> You agree to follow the Moderator Guidelines for Healthy Communities;

That document functions as an addendum to the user agreement, and a few of its passages stand out to me. Taken together, they make a fairly convincing case that this banning practice violates the Reddit user agreement:

> Engage in Good Faith: Healthy communities are those where participants engage in good faith, and with an assumption of good faith for their co-collaborators. It’s not appropriate to attack your own users. Communities are active, in relation to their size and purpose, and where they are not, they are open to ideas and leadership that may make them more active.

This one, about engaging in good faith, is broader in scope than the others. Banning users through an automated service based on their participation in another subreddit is anything but good-faith engagement. It categorically assumes that anyone who participates in a given subreddit is “bad” and will cause trouble on other subreddits. That is an exceptionally broad brush to paint with. Every subreddit can attract bad actors, and my experience with /r/NoNewNormal showed that even a relatively controversial subreddit has plenty of good-faith participants. I imagine that /r/PoliticalHumor, /r/oddlysatisfying, /r/atheism, and /r/pics, being relatively large subreddits, have their fair share of bad actors as well. But no one, at least to my knowledge, has categorically banned the users who participate in those subreddits, because doing so would cause a lot of collateral damage and affect many innocent people. I don’t go on Reddit to harass people. I participate in /r/LockdownSkepticism to discuss the situation with (at least somewhat) like-minded people and to get emotional support from them. I have better things to do than stir up trouble.

> Clear, Concise, and Consistent Guidelines: Healthy communities have agreed upon clear, concise, and consistent guidelines for participation. These guidelines are flexible enough to allow for some deviation and are updated when needed. Secret Guidelines aren’t fair to your users—transparency is important to the platform.

Nowhere in any of these subreddits’ rules does it state that participation in certain outside subreddits disqualifies someone from participating in the subreddits in question. /r/pics states in its rules that it has a zero-tolerance policy for COVID misinformation, but at least in the case of /r/LockdownSkepticism, there is no misinformation, unless you consider questioning the narrative and offering emotional support to other participants to be misinformation in and of itself. I suspect that the term “COVID misinformation” is being deliberately interpreted in an overly broad way to include any criticism of the “official” narrative, which comes off to me as “thought police” (and if simply questioning the narrative counts as misinformation, there are other issues at play). Even then, nothing explicitly states that participation in certain other subreddits is an automatic bar to participation. Any categorical ban like the ones we’ve observed therefore appears to be a “Secret Guideline”, which the moderator guidelines do not permit. If this policy were disclosed, that would be one thing, but since it isn’t, it’s a violation.

> Appeals: Healthy communities allow for appropriate discussion (and appeal) of moderator actions. Appeals to your actions should be taken seriously. Moderator responses to appeals by their users should be consistent, germane to the issue raised and work through education, not punishment.

This is problematic because of the narrow scope the ban message allows for appeals. Users have only a few options. If you want your ban removed, you have to either delete all of your contributions to the subreddits in question or wait 24 hours. In either case, you must then message the subreddit’s moderators, beg them to remove your ban, and promise never to participate in the named subreddits again. There is no room for discussion about the ban, either: any response other than begging for reinstatement and promising never to associate with those big, bad meanies again will be ignored and get your conversation muted. And, of course, there’s no guarantee that the bot won’t come right behind the human moderator and reinstate the ban automatically after all of that. All of this runs entirely contrary to what the moderator guidelines describe.

> Management of Multiple Communities: We know management of multiple communities can be difficult, but we expect you to manage communities as isolated communities and not use a breach of one set of community rules to ban a user from another community. In addition, camping or sitting on communities for long periods of time for the sake of holding onto them is prohibited.

This one is pretty straightforward: each subreddit is to be treated as if it were an island. What happens in one subreddit is to have no bearing on the management of another. I moderate a bunch of subreddits, including four about retail that are closely related to each other and promoted as a group. If someone causes disruption on one, I ban their account on that one. I don’t ban the account on all four because (A) that’s too much work, and (B) I don’t want a ban message to advertise a subreddit to a bad actor who may not have known about it before. After all, multiple accounts are a thing, and that might just open the door to more moderator work that I already get no compensation or thanks for. In this case, then, per the moderator guidelines, a user’s participation in /r/LockdownSkepticism should have no bearing on their participation in /r/pics unless that individual user actually causes disruption there. In short: don’t categorically ban participants in one subreddit from another, unrelated subreddit.

Taken together, these passages show that these subreddits are clearly flouting their responsibilities under the user agreement while claiming to uphold it. Not a good look.

I did some research into these sorts of bans, and it appears that the bot responsible for them is a service called Saferbot, which describes itself as “a third-party tool created for use on Reddit that allows moderators to define rules that mitigate the effects of brigades and harassing subreddits,” and “a highly specialized moderation bot, filling a large gap in Reddit’s functionality and policy.” According to its documentation, it “scans content posted to harassing subreddits on your list and bans producers of that content from your community.” In other words, it indiscriminately bans people from one subreddit by virtue of their participation in another. I suspect that the designer of this bot, a user named yellowmix, knows that their tool violates Reddit’s user agreement, based on this passage:

> Saferbot is a moderation bot performing moderator duties. Moderators can ban any user they want for any reason, including no reason. Saferbot, however, has a very good reason. In addition to protecting your community, preventing harmful users from harassing helps them from breaking the site-wide anti-harassment and vote manipulation policies. Saferbot makes everyone safe!

If you follow the links that the author includes, you will see that they rely on very flimsy evidence to justify their bot’s existence. The first link points to a Wikipedia article about the AOL Community Leader Program. I question what that has to do with Reddit, considering that Reddit was created after the AOL Community Leader Program was discontinued. Additionally, the article doesn’t discuss anything related to banning users, even in the context of that program, let alone Reddit. The second link goes to a comment on the /r/help subreddit. The comment is from a Reddit admin, so it is “word of god” as far as that goes. However, seeing as the comment was posted in June 2013, I suspect that the advice is dated to the point of being of no use today, considering that the moderator guidelines were added to the user agreement on June 8, 2018. In any event, I suspect that this statement is the bot author’s attempt to justify their bot’s existence to themselves more than anything else, because it’s clear that this bot is being used to excommunicate users who post in certain subreddits (the Reddit equivalent of “cancel culture”, if you will), in clear violation of the Reddit user agreement.

Another problem, one that is by no means unique to Reddit but is a strong driver of all of this, is the extreme polarization of the population over how to handle COVID-19 mitigation measures. I’ve made my opinions about the pandemic very well known in this space, so I don’t feel the need to repeat them here, but in general, I acknowledge that COVID-19 is a serious public health concern while viewing many of the mitigation measures that the public has been mandated to take as unreasonable. In other words, the CDC can make whatever recommendations it wants, but not all of them are reasonable for elected officials to impose as actual public policy. Some are better left as recommendations that would be good to follow rather than mandated by government order. That puts me at odds with a lot of people, and I am well aware of it. In the last two years, too many people have shown just how intolerant and judgmental they can be toward differing opinions. According to these folks, anyone who does not toe the CDC line exactly is selfish and deserves to be ostracized and shamed. There are people whom I considered perfectly reasonable before 2020 whom I now can’t take seriously anymore, because they’ve shown their true colors and demonstrated exactly how judgmental and openly hostile they are toward differences of opinion. A lot of this has also come from the “question authority” set, which I find quite ironic. Unsurprisingly, this has spilled over onto Reddit, where the nature of the platform has only amplified it.

Ultimately, though, this particular situation comes down to the way that Reddit management runs the service. In the time I’ve spent on Reddit, I’ve noticed that while the folks there are pretty skilled when it comes to technical matters, they are remarkably incompetent when it comes to dealing with people, and they have let the moderators of big subreddits wield a great deal of power over the way the site is run. The demise of /r/NoNewNormal is a perfect example of this, and it demonstrates a lot of what is wrong with Reddit’s governance. Before /r/NoNewNormal was banned, Reddit co-founder and CEO Steve Huffman posted a statement saying, “Dissent is a part of Reddit and the foundation of democracy. Reddit is a place for open and authentic discussion and debate. This includes conversations that question or disagree with popular consensus. This includes conversations that criticize those that disagree with the majority opinion. This includes protests that criticize or object to our decisions on which communities to ban from the platform.” Following this, the moderators of about 135 larger subreddits set the subreddits they moderated to “private”, essentially “going dark” in protest of this stance on the part of Reddit management. I consider it the equivalent of a child throwing a temper tantrum until they get what they want. It was also something of a denial-of-service attack, but done from the inside rather than externally. Reddit management responded to this electronic temper tantrum by giving the moderators exactly what they wanted and banning /r/NoNewNormal. I’ve stated in this space before that banning /r/NoNewNormal was ultimately the right thing to do for a number of reasons, but I’ve always thought that while Reddit came to the right conclusion, the method by which it got there was highly problematic.

By capitulating to the moderators of larger subreddits, Reddit management showed that the “go dark” tactic works as a way to bully it into doing whatever those moderators want. It handed power over the site from Reddit’s paid staff to the anonymous, unpaid moderators of the large subreddits. Management’s quick capitulation confirmed that those moderators have real power over the governance of the site: it signaled that the site can’t exist without their subreddits, and that those moderators apparently own them.

Additionally, the existence and use of Saferbot to fill alleged gaps in Reddit policy and site functionality shows that these moderators feel they can “take the law into their own hands” and do things that the site’s owners have not provided for, either through technical means or through policy. That, especially when Reddit management fails to intervene in these overreaches, is proof that the inmates now run the asylum. I have always taken the stance that what looks like an omission or gap in policy or functionality may exist for a reason, so understanding why things are the way they are goes a long way toward determining a course of action. In other words, make sure that you fully understand why something is the way it is before working around it or otherwise changing it. When you take the law into your own hands and create policies that extend well beyond the subreddits you are entrusted to moderate, you’re essentially saying that you know better than the people who actually own the space, even if they have delegated its day-to-day moderation to you. That is very problematic as far as I’m concerned.

With all of this said, what do you do? Reddit is a very user-driven space. Without the users, there is just an empty framework intended to house content. Reddit management provides the space and funds and maintains the infrastructure behind it, but it does very little when it comes to managing people. It tends to take a hands-off approach and only gets involved when it absolutely has to, much to the site’s detriment. Subreddit moderators have very wide latitude to shape the user experience on the site. It has been thoroughly demonstrated that the relationship between site management and the big moderators has gotten out of balance, and it’s really time for Reddit management to rein those moderators in and reassert itself as the party that is ultimately in charge. A management team that can be successfully bullied by the big moderators is not going to be very effective at anything, because whenever the big moderators don’t like something, they will just pitch a fit, and management will capitulate and give them whatever they want. Capitulating was a major mistake, because it firmly tipped the balance of power toward the unpaid moderators and away from the site’s actual management. I’ve always been under the impression that Reddit management owned all of the subreddits, and that the moderators were volunteers who were delegated responsibility for running them on Reddit management’s behalf. But when the moderators of these subreddits (subreddits that were clearly essential to the site’s success) stopped playing ball in protest of management’s decision and attempted to deny Reddit parts of its own service, Reddit management bowed to them. So who is really in charge? The moderators. They wanted a subreddit gone, and they got exactly what they wanted from management.

I suspect that a better course of action would have been for Reddit management to assert very publicly that Reddit was its website and that it was in charge. The truth is that it should have removed every single user who participated in that protest from their moderator roles and barred them from moderating anything else in the future. If they refused to perform the roles that they volunteered for, then there was no sense in continuing to let them have that access, especially when they had demonstrated that they were willing to abuse it.

I would also suggest that Reddit implement some significant policy changes to reduce the overall influence of the big moderators and limit their ability to degrade the user experience. Subreddit moderators probably have too much authority right now, and it needs to be reined in. First, subreddit moderators should not be able to issue indefinite (“permanent”) bans. An indefinite ban from any part of the service, especially for users with a paid subscription (which I have had since 2013), should be above any moderator’s pay grade. The ability to issue a ban of finite duration, such as 90 days, six months, or a year, is reasonable for a moderator, but anything longer than a year, and especially an indefinite ban, should be the sole prerogative of Reddit management, used only in extreme cases. That would curb abusive services like Saferbot, which are being used to ostracize users with differing opinions, because the damage they cause would be reversed automatically over time. As it stands now, those COVID-related ostracization bans will remain on the books for many years, well after the pandemic is just a footnote in history, unless someone manually reverses them. Reddit management currently reserves sitewide suspensions and bans as its sole prerogative, and that should be expanded to include any indefinite ban.

The other big policy change I would implement is tighter regulation of bots. Wikipedia has a policy that sets out requirements for bots and requires manual review and approval of every bot before it is allowed to operate on the site. It is time for Reddit management to do likewise: set minimum standards for bots, and require approval to ensure that those standards are met. Reddit currently does not regulate bots, and as a result, the site hosts harmful and/or abusive bots like Saferbot, countless useless bots that aren’t harmful but are just plain annoying, and some useful bots (though these are few and far between). And then there are my favorites: bots that police other bots, such as /r/BotDefense, which is designed to stop the useless and annoying bots on the site while allowing the useful ones to keep operating. Services like /r/BotDefense are essentially a workaround for the lack of formal bot regulation on Reddit. Formal regulation of bots would go a long way toward improving the site experience and cutting down on the potential for abuse.

For all that I’ve criticized Reddit here, some might ask why I don’t just leave. Despite Reddit’s many flaws, I find that there is still a lot of redeeming value there, even if there are some large and influential bad actors. I participate in a lot of smaller, more niche subreddits, and they still have lots of value to me. If I were leaving Reddit, I wouldn’t write more than 4,000 words about it here. I would just wash my hands of the whole situation and leave, just as I never wrote here about quitting Wikipedia nearly ten years ago. In that instance, I just put a site update on the ticker to let people know that I was no longer participating there, and moved on. I’m still invested in Reddit, and I want to see it improve. I haven’t yet reached the point where I consider it beyond saving. And if I can change hearts and minds and bring about some positive improvement there, I will.

All in all, I hope we see some positive improvement in the future. Reddit has a lot of value for the Internet at large, but it has plenty of issues to work through when it comes to its governance.